Artificial neural networks become more powerful

An international team of scientists from TU Eindhoven, the University of Texas at Austin (US) and the University of Derby (UK) has developed a revolutionary method that speeds up artificial intelligence (AI) algorithms quadratically. This makes AI available on cheaper computers and, within one to two years, would enable supercomputers to run artificial neural networks whose capabilities exceed those of today's networks quadratically. The researchers have published their method in the journal Nature Communications.

Frog brain

Artificial neural circuits (neural networks) form the basis of the AI revolution, which is currently shaping every aspect of society and technology. But today's artificial neural networks are still far from being able to solve highly complex problems. The latest supercomputers struggle with a network of 16 million neurons (about the size of a frog's brain), while a powerful desktop computer needs nearly two weeks to train a network of 100,000 neurons.

Biological networks

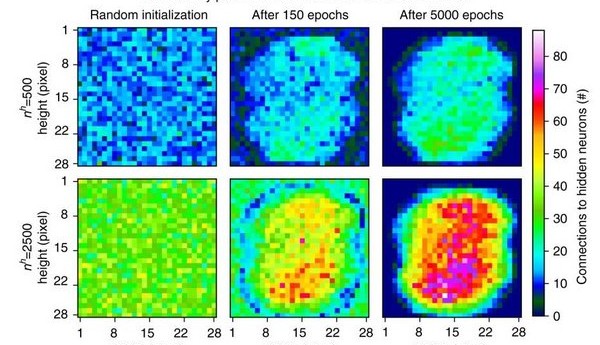

The proposed method, Sparse Evolutionary Training (SET), takes its inspiration from biological networks, which owe their efficiency to three simple properties: they have relatively few connections, only a few hubs, and short paths between nodes. The work published in Nature Communications demonstrates the advantages of moving away from fully connected neural networks by introducing a new training procedure that starts from a random network with few connections and iteratively evolves it into a scale-free network. At each step, the weakest links are removed and new links are added at random, comparable to a biological process known as synaptic shrinking.
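The prune-and-regrow step described above can be sketched in a few lines. This is a minimal, hypothetical illustration, not the authors' implementation: it assumes a single layer stored as a dictionary from `(input, output)` index pairs to weights, ranks links purely by weight magnitude, and uses illustrative helper names (`random_empty_positions`, `init_sparse_layer`, `prune_and_regrow`), an illustrative removal fraction `zeta` and illustrative initial weight scales. The gradient-descent training that would run between evolution steps is left out.

```python
import random

# Hypothetical representation (an assumption for this sketch): a sparse layer is a
# dict mapping (input_index, output_index) -> weight; absent pairs mean "no link".


def random_empty_positions(occupied, n_in, n_out, k, rng):
    """Pick k distinct (i, j) positions that are not in `occupied` (rejection sampling)."""
    if k > n_in * n_out - len(occupied):
        raise ValueError("not enough empty positions")
    chosen = set()
    while len(chosen) < k:
        pos = (rng.randrange(n_in), rng.randrange(n_out))
        if pos not in occupied:
            chosen.add(pos)
    return chosen


def init_sparse_layer(n_in, n_out, n_links, rng):
    """Start from a random sparse layer with exactly n_links connections."""
    positions = random_empty_positions(set(), n_in, n_out, n_links, rng)
    return {pos: rng.gauss(0.0, 0.1) for pos in positions}  # 0.1: illustrative scale


def prune_and_regrow(weights, n_in, n_out, zeta, rng):
    """One evolution step: remove the fraction `zeta` of links with the smallest
    |weight|, then add the same number of new links at random empty positions.
    Returns a new dict; the input layer is not modified."""
    n_swap = round(zeta * len(weights))
    # Rank existing links from weakest to strongest and keep all but the n_swap weakest.
    ranked = sorted(weights, key=lambda pos: abs(weights[pos]))
    kept = {pos: weights[pos] for pos in ranked[n_swap:]}
    # Regrow: new links get small random weights (0.01 is an illustrative scale).
    for pos in random_empty_positions(kept, n_in, n_out, n_swap, rng):
        kept[pos] = rng.gauss(0.0, 0.01)
    return kept


if __name__ == "__main__":
    rng = random.Random(0)
    layer = init_sparse_layer(100, 100, n_links=500, rng=rng)
    for _ in range(3):  # weight training between steps is omitted here
        layer = prune_and_regrow(layer, 100, 100, zeta=0.3, rng=rng)
    print(len(layer))  # 500: the number of links stays constant
```

Because every pruned link is replaced by a new one, the number of connections stays fixed while the topology keeps changing; with `zeta = 0.3` and 500 links, each step swaps out the 150 weakest links.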

Speed gain

This remarkable gain in speed is of great significance, as it allows AI to be applied to problems that are currently unsolvable because of their enormous number of parameters. Examples include affordable personalised medicine and complex systems.
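One way to see where a quadratic gain can come from is to count connections. A fully connected layer between two groups of n neurons needs n × n weights, whereas a sparse layer in which each neuron keeps a roughly fixed number of links needs a number of weights that grows only linearly with n. The sketch below compares the two; it assumes, purely for illustration, a sparse layer with about `epsilon * (n_in + n_out)` links and an arbitrary `epsilon = 20`. The function names are hypothetical.

```python
def dense_weights(n_in, n_out):
    """Fully connected layer: every input neuron links to every output neuron."""
    return n_in * n_out


def sparse_weights(n_in, n_out, epsilon):
    """Sparse layer with about epsilon * (n_in + n_out) links (illustrative assumption),
    i.e. a count that grows linearly with the layer sizes."""
    return round(epsilon * (n_in + n_out))


for n in (1_000, 10_000, 100_000):
    dense = dense_weights(n, n)
    sparse = sparse_weights(n, n, epsilon=20)
    # Ratios printed: 25x, 250x, 2500x
    print(f"{n:>7} neurons per layer: dense {dense:>14,} vs sparse {sparse:>10,} ({dense // sparse}x)")
```

The dense count grows with n², the sparse count with n, so the ratio between them (here n / 40) keeps widening as networks get larger.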

Laptop

In practice, anyone running SET on a laptop could now build an artificial neural network with up to 1 million neurons, whereas with existing methods networks of this size required expensive computing clouds. This does not mean, however, that such clouds are no longer useful. Currently, the largest artificial neural networks, built on supercomputers, are the size of a frog's brain (about 16 million neurons). Once a few technical hurdles have been overcome, it will be possible within a few years to use SET on these same supercomputers to build neural networks that approach the capabilities of the human brain (about 80 billion neurons).