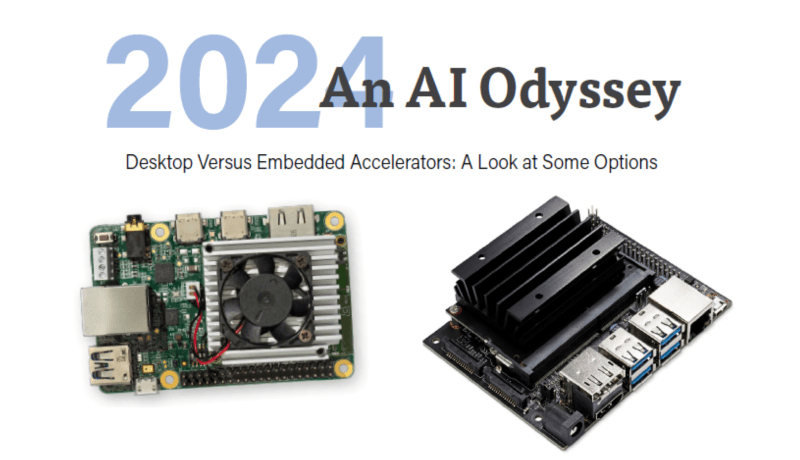

AI Accelerators: Desktop Versus Embedded


Artificial intelligence (AI) is evolving rapidly, and choosing the right edge AI accelerator is crucial. Let’s highlight a few key AI accelerators, comparing their features and performance, to help you make an informed decision for your specific AI needs.

The Need for AI Accelerators

Why do we need AI accelerators? Surely, being Turing complete, any CPU or microcontroller could perform exactly the calculations that an AI accelerator could. General-purpose CPUs and microcontrollers, however, are designed to handle a broad range of tasks, and they are not very efficient at the specific computations that AI workloads rely on.

An AI accelerator is a specialized hardware component designed to speed up artificial intelligence tasks, primarily focusing on the inference phase where AI models make predictions based on new data. Unlike general-purpose processors, AI accelerators are optimized for performing large-scale matrix operations and parallel processing, which are essential for tasks like image recognition, natural language processing, and other machine learning applications. This makes them significantly faster and more efficient at handling these specific calculations compared to standard CPUs.
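To get a feel for why matrix throughput matters, here is a rough sketch that counts the operations in a single matrix multiplication and estimates a best-case run time at a given peak rate. The function names and the layer size are my own illustrative assumptions, and the timing assumes perfect utilization, which real hardware rarely reaches.

```python
def matmul_ops(m: int, k: int, n: int) -> int:
    """Operations in an (m x k) @ (k x n) product.

    Each of the m*n outputs needs k multiplies and k adds,
    so we count 2 operations per term.
    """
    return 2 * m * k * n


def ideal_seconds(ops: float, tops: float) -> float:
    """Best-case run time at a peak rate of `tops` tera-operations per second."""
    if tops <= 0:
        raise ValueError("tops must be positive")
    return ops / (tops * 1e12)


# Hypothetical layer: one 1024-feature input vector times a 1024 x 1024 weight matrix
ops = matmul_ops(1, 1024, 1024)
print(ops)  # 2097152
print(ideal_seconds(ops, tops=4.0))  # best case on a 4 TOPS device
```

A full network repeats this kind of product many times per inference, which is why a device built around parallel multiply-accumulate units pulls ahead of a general-purpose CPU.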

As AI continues to advance, edge computing — processing data locally on devices rather than relying on centralized cloud servers — has gained prominence. This shift enhances real-time responsiveness, data privacy, and overall efficiency. To support these needs, various edge AI accelerators have been developed for both embedded systems and PCs.

Choosing the right tool for your specific needs can be challenging, whether you require high-powered solutions for complex tasks or lightweight devices for real-time field operations. Let’s look at an overview of some of the leading edge AI accelerators, highlighting their features, use cases, and performance.

Why This Comparison?

I often need to process vast amounts of data for applications such as speech-to-text transcription (e.g., using Whisper), automated video editing, and classification of archival video captures. For these tasks, on-PC solutions with powerful GPUs such as my NVIDIA RTX 4070 are indispensable for their computational power and flexibility. Conversely, for real-time detection tasks in the field or in industrial environments, embedded solutions such as the Raspberry Pi AI Kit or Coral Dev Board are more practical, given their compact size and lower power requirements. This comparative study aims to help identify the best tools for different scenarios.

Embedded Edge AI Accelerators

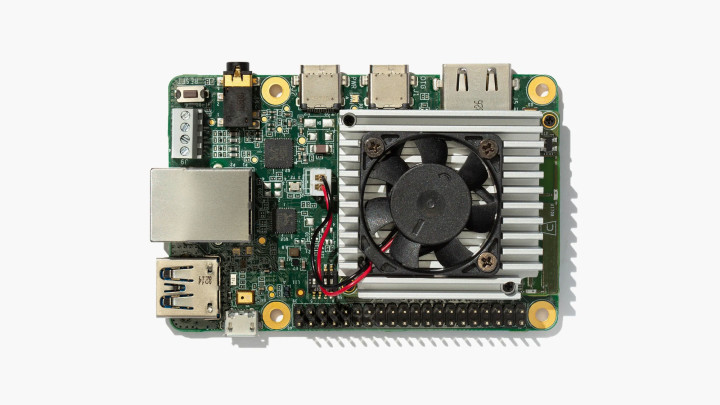

Raspberry Pi AI Kit with Hailo-8L Integrated

Introduced in 2024, the Raspberry Pi AI Kit brings the Hailo-8L AI accelerator to the Raspberry Pi 5, making powerful AI capabilities possible in a low-cost, compact form factor. It’s an affordable solution for adding AI to various Raspberry Pi projects.

The Hailo-8L AI accelerator plugs into the M.2 socket on the included Raspberry Pi M.2 HAT+. Seen in its place in Figure 1, it offers 13 tera-operations per second (TOPS), making it suitable for tasks such as real-time object detection, semantic segmentation, pose estimation for recognizing gestures, and facial landmarking. The kit’s low power consumption is ideal for battery-powered applications and its compact size integrates well with official Raspberry Pi camera accessories. While it’s not as powerful as some high-end NPUs, it’s perfect for lightweight AI tasks, educational projects, and hobbyist applications.

Coral Dev Board

The Coral Dev Board, introduced in 2019, is designed to run TensorFlow Lite models efficiently as a stand-alone solution. In a Raspberry Pi-esque form factor (Figure 2), it features an Edge TPU coprocessor delivering 4 TOPS. It’s excellent for image classification, object detection, and speech recognition, making it ideal for IoT applications. Its low power consumption is a significant advantage, especially for embedded systems. However, it’s primarily limited to TensorFlow Lite models and offers less computational power compared to some other accelerators. Despite this, it seamlessly integrates with Google Cloud services and provides efficient performance for basic AI applications in embedded systems.

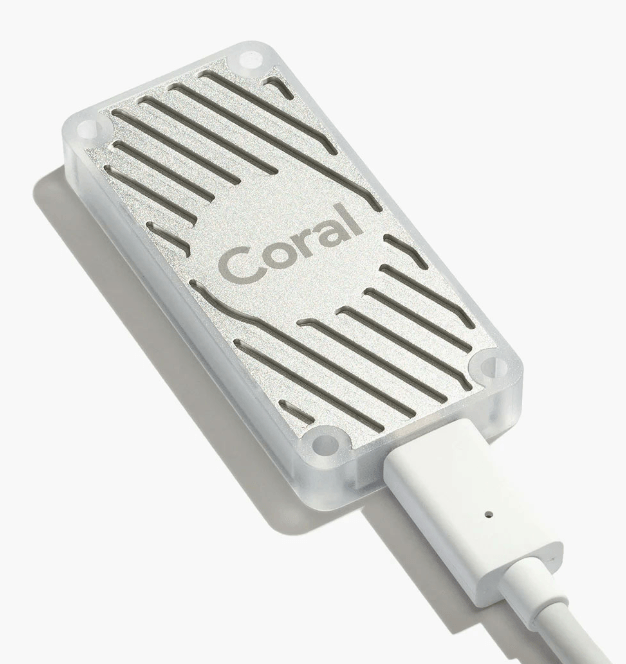

Coral USB Accelerator

If you already have your computing platform decided on and need to bring in some external AI muscle, there’s the Coral USB Accelerator. This compact device, roughly the size of a standard USB stick (Figure 3), brings the power of Google’s Edge TPU to any compatible computer via a USB 3.0 connection. It also delivers 4 TOPS of performance while consuming only 2 W of power. The USB Accelerator is ideal for developers looking to add AI capabilities to existing systems or prototype edge AI applications without committing to a full development board. It excels in tasks such as image classification and object detection, making it a versatile tool for AI experimentation and small-scale deployments.
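Performance per watt is a handy yardstick for embedded parts. As a minimal sketch, using only the 4 TOPS and 2 W figures quoted above (the helper name is my own):

```python
def tops_per_watt(tops: float, watts: float) -> float:
    """Peak efficiency: tera-operations per second for each watt consumed."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return tops / watts


# Coral USB Accelerator figures as quoted in this article
print(tops_per_watt(tops=4.0, watts=2.0))  # 2.0
```

Bear in mind that this is a peak figure. The efficiency you see in practice depends on the model and on how well it maps to the Edge TPU.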

NVIDIA Jetson

NVIDIA’s Jetson line is a series of embedded computing boards designed for AI applications at the edge. This ecosystem includes several products catering to different performance needs and use cases:

- Jetson Nano: The entry-level option, suitable for smaller AI projects and prototyping.

- Jetson Xavier NX: A mid-range option offering significantly higher performance than the Nano.

- Jetson AGX Xavier: A high-performance module for demanding AI applications.

- Jetson AGX Orin: The latest and most powerful in the lineup, designed for advanced robotics and autonomous machines.

These modules vary in their computational power, ranging from 0.5 TOPS for the Nano to over 200 TOPS for the AGX Orin.

All Jetson devices run on NVIDIA’s JetPack SDK, which includes libraries for deep learning, computer vision, accelerated computing, and multimedia processing. This common software environment allows for easier scaling and deployment across the Jetson family.

The Jetson line is well-suited to a variety of applications including robotics, drones, intelligent video analytics, and portable medical devices. Its scalability makes it a versatile choice for businesses looking to develop and deploy AI applications across different performance tiers.

The entry-level Jetson Nano (Figure 4), launched in 2019, brought the company’s AI computing capabilities to small, affordable form factors. It features a 128-core Maxwell GPU, a quad-core ARM Cortex-A57 CPU, and 4 GB of RAM, delivering 472 GFLOPS (0.47 TOPS). The Jetson Nano is well-suited for small but demanding AI tasks in embedded systems. It can handle multiple neural networks simultaneously, making it a flexible option for various AI applications. However, its power consumption is higher than other embedded options, and it has a larger form factor.
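The 472 GFLOPS figure converts to the "tera" scale with a simple division by 1,000, as the short sketch below shows (the helper name is my own). One caveat: FLOPS count floating-point operations, while the TOPS figures quoted for accelerators such as the Edge TPU usually refer to low-precision integer operations, so the converted number only puts the two on the same scale and is not a like-for-like benchmark.

```python
def gflops_to_tera(gflops: float) -> float:
    """Rescale giga-operations per second to tera-operations per second."""
    return gflops / 1000.0


# Jetson Nano figure as quoted in this article
print(round(gflops_to_tera(472), 2))  # 0.47
```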

The Nano is an AI module with a 260-pin edge connector, so it’s not feasible to use it stand-alone without plugging it into a carrier board. You’ll want to start with a Dev Kit, which you’ll find under “Related Products.” For more on the other boards in the Jetson series, see NVIDIA’s comparison page.

Intel Neural Compute Stick 2 (NCS2)

The Intel Neural Compute Stick 2 (NCS2), released in 2018, is another portable USB-based AI accelerator (Figure 5). Featuring the Intel Movidius Myriad X VPU, it provides approximately 1 TOPS of performance. It’s ideal for AI prototyping, research, and enhancing existing PCs with AI capabilities. Its portability and ease of use make it a popular choice for developers and researchers. However, it depends on a host PC for power and data processing and offers lower computational power compared to high-end GPUs.

PC-Based Edge AI Accelerators

NVIDIA GPUs

The NVIDIA RTX 4070 referenced above is part of the RTX 40 series released in 2022 and is a powerhouse for both gaming and professional AI applications. This high-end GPU, found on graphics cards from several vendors, boasts 29.8 TFLOPS (29,800 GFLOPS) and 12 GB of GDDR6 memory. It’s perfect for the training and inference of large neural networks, high-performance gaming, and content creation tasks such as video editing and 3D rendering. The extensive software support and exceptional computational power make it ideal for demanding AI applications, including speech-to-text transcription and video editing. If budget allows, you can step up to the RTX 4090 for even more power, or save with an RTX 3080, which still offers substantial performance. However, the high power consumption and cost of these cards make them better suited to larger desktop workstations.

The RTX series carries NVIDIA’s “the ultimate in ray tracing and AI” tagline and remains at the high end of the company’s lineup. The downside can be that when you step away from your workstation, you might have the odd eager child in the household begging to play the latest FPS (first-person shooter) game at the high FPS (frames per second) available on these beasts.

Apple M-Series Chips

For those invested in the Apple ecosystem, the M1 chip, touted as Apple’s first chip designed specifically for the Mac, was introduced in 2020. The subsequent M2 chip was launched in 2022, and both integrate AI acceleration directly on the chip, alongside the CPU and GPU. The M1 features a 16-core Neural Engine capable of 11 TOPS, while the M2’s 16-core Neural Engine delivers 15.8 TOPS. These chips are found in devices such as the MacBook Pro and Mac Mini, offering on-device AI processing for applications such as Siri, image processing, and augmented reality. The seamless integration with macOS and iOS makes these chips highly efficient for consumer-grade AI applications.

The M3, M3 Pro, and M3 Max were announced in 2023 with improvements across the board. The M3 chips were the first 3-nanometer chips and featured a new GPU architecture that enabled speed improvements of up to 30% and 50% over the M2 and M1, respectively. With faster CPU performance, a more efficient Neural Engine, and a next-generation GPU architecture that supports advanced features such as dynamic caching and hardware-accelerated ray tracing, the M3 series is particularly well-suited for intensive tasks such as high-end graphics processing, AI/ML workloads, and complex computing tasks.

The paint was barely dry on the M3 announcement when Apple announced the M4 chip in May of 2024, but this one is currently only available in the company’s iPad Pro. It will be interesting to see if any third-party developer comes up with a use case for dedicating an expensive iPad Pro to a commercial or industrial machine learning task.

If you do your heavy lifting in the Apple-verse, you won’t be disappointed in a MacBook with an M2 or M3 chip for your AI applications. Which chip you’ll have under the hood will depend on your budget and application.

Embedded vs. PC-Based: Which to Choose?

The choice between embedded and PC-based edge computing solutions largely depends on the specific requirements of your application.

Embedded Edge AI

- Best For: Low-power, real-time applications where size and power consumption are critical, such as smart home devices, wearables, and autonomous sensors.

- Benefits: Lower power usage, compact size, cost-effective.

PC-Based Edge AI

- Best For: Applications requiring high computational power and flexibility, such as desktop AI applications, complex data analysis, and development and testing of AI models.

- Benefits: High performance, greater flexibility, ability to handle more complex tasks.

Making the Choice

Embedded and PC-based edge AI solutions have their unique advantages. Embedded accelerators are perfect for low-power, real-time applications, while workstation/laptop-based solutions such as NVIDIA RTX-based GPUs and Apple M-series chips offer high performance for more demanding tasks. By understanding the strengths and limitations of each, you can choose the right solution for your specific needs, harnessing the power of AI to create smarter, more efficient systems.

This comparison is by no means exhaustive, but it highlights some of the most popular edge AI accelerators I’ve tried. Each has its pros and cons — the key is to choose the one best fitted to your application.

The article, "2024 An AI Odyssey: Desktop Versus Embedded Accelerators" (230181-H-01), appears in Elektor September/October 2024.
