Autonomous Plane Navigates Parking Garage Without Using GPS

August 15, 2012

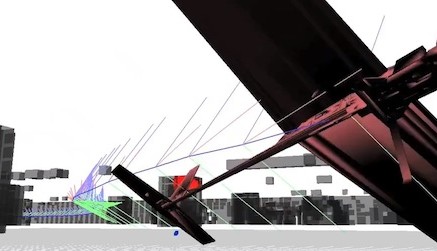

A fixed-wing drone built by MIT researchers autonomously piloted its way through an underground parking garage using only on-board sensors and a combination of two sophisticated estimation algorithms.

Autonomous navigation in obstacle-dense, GPS-denied environments is typically done with rotorcraft such as quadcopters. Their slow movement gives them time to process the data from their on-board sensors, and their high maneuverability lets them avoid obstacles more easily.

But Adam Bry, Abraham Bachrach and Nicholas Roy of MIT’s Robust Robotics Group insisted on flying an airplane indoors, and they have now completed a series of successful test flights, as shown in the video below.

"The reason that we switched from the helicopter to the fixed-wing vehicle is that the fixed-wing vehicle is a more complicated and interesting problem, but also that it has a much longer flight time," Roy said in the MIT press release. "The helicopter is working very hard just to keep itself in the air, and we wanted to be able to fly longer distances for longer periods of time."

The custom-built airplane has a wingspan of two meters, weighs about two kilograms (4.4 lbs) and flies at about 35 km/h (22 mph). Its payload consists of a laser rangefinder that measures the distance to surrounding objects, inertial sensors (gyroscopes and accelerometers) and a 1.6 GHz Intel Atom-based flight computer for on-board computation.
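
To give a feel for how little time the on-board computer has, the short calculation below converts the quoted cruise speed to meters per second and estimates how far the plane travels between consecutive state updates. The update rates (10, 40 and 100 Hz) are illustrative assumptions, not figures reported by MIT, and the function names are hypothetical.

```python
# Back-of-the-envelope: how far does the plane move between state updates?
# The 35 km/h speed comes from the article; the update rates are assumed.


def kmh_to_ms(speed_kmh: float) -> float:
    """Convert km/h to m/s (1 km/h = 1000 m / 3600 s)."""
    return speed_kmh * 1000.0 / 3600.0


def meters_per_update(speed_kmh: float, rate_hz: float) -> float:
    """Distance covered during one update period at constant speed."""
    return kmh_to_ms(speed_kmh) / rate_hz


if __name__ == "__main__":
    speed = 35.0  # km/h, from the article
    print(f"{speed} km/h = {kmh_to_ms(speed):.2f} m/s")
    for rate in (10.0, 40.0, 100.0):  # hypothetical update rates in Hz
        print(f"at {rate:>5.0f} Hz: {meters_per_update(speed, rate):.3f} m per update")
```

At 35 km/h the plane covers about 9.7 m every second, so even at an assumed 40 Hz it moves roughly a quarter of a meter between updates, which is why the state estimate has to be computed in real time.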

Drones that rely on external positioning systems or on-board cameras can often map their environment as they go. But because flying a plane indoors is such a difficult problem, the MIT drone was given a 3D map of the environment in advance.
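
A prior map is useful because it lets the estimator predict what the laser rangefinder should read from any candidate position. The sketch below shows the idea in 2D for simplicity (the MIT drone used a 3D map): it marches a ray through a hypothetical occupancy grid until it hits an occupied cell. The grid layout, cell size, step size and the `expected_range` function are all assumptions for illustration.

```python
import math

# Hypothetical 2D occupancy grid: '#' = occupied (wall or pillar), '.' = free space.
# Row index increases with y, column index with x.
GRID = [
    "##########",
    "#........#",
    "#...##...#",
    "#........#",
    "##########",
]
CELL_SIZE = 1.0  # meters per cell (assumed)


def occupied(x: float, y: float) -> bool:
    """True if point (x, y), in meters, lies in an occupied cell or outside the map."""
    col, row = math.floor(x / CELL_SIZE), math.floor(y / CELL_SIZE)
    if not (0 <= row < len(GRID) and 0 <= col < len(GRID[0])):
        return True  # treat everything outside the map as an obstacle
    return GRID[row][col] == "#"


def expected_range(x: float, y: float, heading: float,
                   max_range: float = 30.0, step: float = 0.01) -> float:
    """Predict a laser reading by marching a ray from (x, y) along `heading` (radians)."""
    dx, dy = math.cos(heading), math.sin(heading)
    for i in range(round(max_range / step)):
        r = i * step
        if occupied(x + r * dx, y + r * dy):
            return r
    return max_range


if __name__ == "__main__":
    print(expected_range(1.5, 1.5, 0.0))          # beam along +x toward the right-hand wall
    print(expected_range(4.5, 1.5, math.pi / 2))  # beam along +y toward the pillar
```

The two calls should report roughly 7.5 m and 0.5 m. A real system would ray-cast in 3D and far more efficiently, but the principle of comparing predicted and measured ranges is the same.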

But even with the map, determining the drone's state (its location, physical orientation, velocity and acceleration) from the on-board sensor data demands too much computational power from the flight computer.
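
Writing the state out makes the size of the problem concrete: the four quantities listed above have three components each, 12 numbers in total. The sketch below is a hypothetical container for them plus a simple constant-acceleration prediction step. The class and function names, the roll/pitch/yaw representation and the kinematic model are assumptions for illustration, not details of MIT's system.

```python
from dataclasses import astuple, dataclass

Vec3 = tuple[float, float, float]


@dataclass(frozen=True)
class PlaneState:
    """Hypothetical container for the state quantities listed in the article.

    Orientation is stored as roll, pitch, yaw (radians) for readability; a real
    estimator would more likely use a quaternion or rotation matrix.
    """
    position: Vec3 = (0.0, 0.0, 0.0)      # meters, in the map frame
    orientation: Vec3 = (0.0, 0.0, 0.0)   # roll, pitch, yaw in radians
    velocity: Vec3 = (0.0, 0.0, 0.0)      # meters per second
    acceleration: Vec3 = (0.0, 0.0, 0.0)  # meters per second squared

    def dimension(self) -> int:
        """Number of scalar quantities the estimator has to track."""
        return sum(len(group) for group in astuple(self))


def predict(state: PlaneState, dt: float) -> PlaneState:
    """Propagate position and velocity with constant-acceleration kinematics.

    Orientation is left unchanged, a deliberate simplification for this sketch.
    """
    pos = tuple(p + v * dt + 0.5 * a * dt * dt
                for p, v, a in zip(state.position, state.velocity, state.acceleration))
    vel = tuple(v + a * dt for v, a in zip(state.velocity, state.acceleration))
    return PlaneState(pos, state.orientation, vel, state.acceleration)


if __name__ == "__main__":
    s = PlaneState(velocity=(9.72, 0.0, 0.0))  # roughly 35 km/h along x
    print(s.dimension())  # 12
    print(predict(s, 0.1).position)
```

Prediction like this is cheap; the expensive part is correcting it against the laser readings, which is where the choice of filter matters.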

To reduce the computational burden, the team combined two algorithms to estimate the state of the plane [PDF].

The particle filter is efficient enough to localize a robot navigating a 2D map, but in a 3D environment it needs too many particles to localize accurately and loses its efficiency.
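
A particle filter represents its belief about the robot's position as a cloud of samples that are moved, weighted against the sensor reading and resampled. The sketch below localizes a robot in a hypothetical 1D corridor from noisy range readings to an end wall; the corridor length, noise levels, particle count and function names are assumptions. The helper `particles_needed` illustrates the dimensionality problem with a rough grid-coverage argument: k samples per dimension require k**d particles, so moving from a planar pose (x, y, heading; d = 3) to a full 3D pose (position plus roll, pitch, yaw; d = 6) squares the count.

```python
import math
import random

WALL_X = 20.0        # hypothetical position of the corridor's end wall (m)
RANGE_SIGMA = 0.2    # assumed laser range noise (m)
MOTION_SIGMA = 0.05  # assumed motion noise per step (m)


def particles_needed(samples_per_dim: int, dims: int) -> int:
    """Rough grid-coverage estimate: k samples per dimension needs k**d particles."""
    return samples_per_dim ** dims


def likelihood(measured: float, expected: float) -> float:
    """Unnormalized Gaussian likelihood of a range reading."""
    return math.exp(-0.5 * ((measured - expected) / RANGE_SIGMA) ** 2)


def pf_step(particles, moved, measured_range, rng):
    """One predict / weight / resample cycle of a 1D particle filter."""
    # Predict: shift each particle by the commanded motion plus random noise.
    predicted = [p + moved + rng.gauss(0.0, MOTION_SIGMA) for p in particles]
    # Weight: compare the measured range with the range each particle expects.
    weights = [likelihood(measured_range, WALL_X - p) for p in predicted]
    if sum(weights) == 0.0:
        weights = [1.0] * len(predicted)  # no particle explains the reading; keep all
    # Resample: draw particles with probability proportional to their weight.
    return rng.choices(predicted, weights=weights, k=len(predicted))


def run_demo(seed: int = 0, n: int = 500, steps: int = 10):
    """Simulate a robot moving 1 m per step and return (true position, estimate)."""
    rng = random.Random(seed)
    true_x = 2.0
    particles = [rng.uniform(0.0, WALL_X) for _ in range(n)]  # start fully uncertain
    for _ in range(steps):
        true_x += 1.0
        z = (WALL_X - true_x) + rng.gauss(0.0, RANGE_SIGMA)  # simulated laser reading
        particles = pf_step(particles, 1.0, z, rng)
    return true_x, sum(particles) / len(particles)


if __name__ == "__main__":
    print(run_demo())
    print(particles_needed(10, 3), particles_needed(10, 6))  # 1000 1000000
```

With only 10 samples per dimension, the particle count jumps from a thousand to a million, which gives a sense of why a pure particle filter struggles on a small flight computer in 3D.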

The Kalman filter, on the other hand, is very efficient but is not well suited to integrating data from the laser rangefinder, something the particle filter does well. The team combined the strengths of both algorithms to obtain sufficiently accurate state estimates.
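
One way to combine the two ideas is to keep the state as a Gaussian (mean and covariance) that a Kalman filter propagates cheaply with inertial data, and to handle the laser update by temporarily sampling particles from that Gaussian, weighting them against the range reading and fitting a new Gaussian to the weighted samples. This resembles a Gaussian particle filter update. It is offered as an illustrative sketch under stated assumptions, not as the MIT team's implementation; the 1D corridor, noise values and all function names are hypothetical.

```python
import math
import random

WALL_X = 20.0      # hypothetical end-wall position in a 1D corridor (m)
RANGE_SIGMA = 0.2  # assumed laser range noise (m)
ACCEL_SIGMA = 0.5  # assumed accelerometer noise (m/s^2)


def kf_predict(mean, cov, accel, dt):
    """Kalman prediction for the state [position, velocity], driven by a measured acceleration.

    mean' = F mean + B accel and cov' = F cov F^T + Q, with F = [[1, dt], [0, 1]],
    B = [dt^2 / 2, dt] and Q = B B^T * ACCEL_SIGMA^2.
    """
    p, v = mean
    new_mean = (p + v * dt + 0.5 * accel * dt * dt, v + accel * dt)
    (a, b), (c, d) = cov
    fpf = ((a + dt * (b + c) + dt * dt * d, b + dt * d),  # F cov F^T, expanded for 2x2
           (c + dt * d, d))
    q = ACCEL_SIGMA ** 2
    b0, b1 = 0.5 * dt * dt, dt
    new_cov = ((fpf[0][0] + q * b0 * b0, fpf[0][1] + q * b0 * b1),
               (fpf[1][0] + q * b1 * b0, fpf[1][1] + q * b1 * b1))
    return new_mean, new_cov


def laser_update(mean, cov, measured_range, rng, n=1000):
    """Particle-based measurement update: sample the Gaussian, weight, refit a Gaussian."""
    (a, b), (_, d) = cov
    l11 = math.sqrt(max(a, 1e-12))  # Cholesky factor of the 2x2 covariance
    l21 = b / l11
    l22 = math.sqrt(max(d - l21 * l21, 0.0))
    samples, weights = [], []
    for _ in range(n):
        z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        p = mean[0] + l11 * z1
        v = mean[1] + l21 * z1 + l22 * z2
        expected = WALL_X - p  # a real system would ray-cast a 3D map here
        samples.append((p, v))
        weights.append(math.exp(-0.5 * ((measured_range - expected) / RANGE_SIGMA) ** 2))
    total = sum(weights)
    if total == 0.0:
        return mean, cov  # reading inconsistent with every sample; skip it
    w = [wi / total for wi in weights]
    mp = sum(wi * s[0] for wi, s in zip(w, samples))
    mv = sum(wi * s[1] for wi, s in zip(w, samples))
    cpp = sum(wi * (s[0] - mp) ** 2 for wi, s in zip(w, samples))
    cpv = sum(wi * (s[0] - mp) * (s[1] - mv) for wi, s in zip(w, samples))
    cvv = sum(wi * (s[1] - mv) ** 2 for wi, s in zip(w, samples))
    return (mp, mv), ((cpp, cpv), (cpv, cvv))


def run_demo(seed=0, steps=20, dt=0.1):
    """Simulate constant acceleration and return (true state, estimated mean)."""
    rng = random.Random(seed)
    true_p, true_v, accel = 2.0, 0.0, 1.0
    mean, cov = (0.0, 0.0), ((4.0, 0.0), (0.0, 1.0))  # deliberately poor initial guess
    for _ in range(steps):
        true_p += true_v * dt + 0.5 * accel * dt * dt
        true_v += accel * dt
        imu = accel + rng.gauss(0.0, ACCEL_SIGMA)            # noisy accelerometer reading
        mean, cov = kf_predict(mean, cov, imu, dt)           # cheap Kalman prediction
        z = (WALL_X - true_p) + rng.gauss(0.0, RANGE_SIGMA)  # noisy laser reading
        mean, cov = laser_update(mean, cov, z, rng)          # particle-based correction
    return (true_p, true_v), mean


if __name__ == "__main__":
    print(run_demo())
```

In this arrangement the Kalman step only ever touches a small mean and covariance, and particles exist only briefly inside the laser update, so the particle count does not have to cover the whole state space at once.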

For the next iteration of the fixed-wing drone, the team wants to enable the plane to generate its own map on the fly.

Photo: MIT