Cellular: Is It the Lowest-Power Option for the IoT?


When tackling your next IoT project, it’s worth casting an eye over the cellular LPWAN offering. While LoRaWAN seems low power on paper, researchers have seen wild variations in battery life with real-life deployments. LTE-M and NB-IoT are both very competitive when it comes to power budgets and offer a range of other benefits on top. But, like all good things, they have their own host of challenges to deal with.

The ability of cellular networks to provide global access to connectivity became clear to me in the early 2000s. I was enjoying being a passenger during a business trip through the Swiss Alps when a colleague called, requesting a presentation for a customer meeting. With my trusty Ericsson T68i, an early Bluetooth-enabled handset, balanced on the dashboard and a laptop between my knees, I quickly set about sending the file using the blisteringly fast 115 kbps offered by GPRS. Admittedly, it took several attempts due to losing the connection in the tunnels. Still, the ability to be linked to the world in the middle of nowhere indicated that the era of limitless wireless connectivity had arrived.

First Glimmer of IoT

Thanks to Bluetooth, the phone could also be accessed through a terminal window like the old wired modems that had been replaced by DSL technology. That meant that a Bluetooth-capable microcontroller and some software handling AT commands were all that was required to connect to the world. We were implementing the Internet of Things (IoT); we just didn’t know it at the time. Now, 20 years later, IoT is established, and we have even better cellular networks. But we would probably be hard-pressed to name a product or application, other than a smartphone or tablet, that uses cellular for data connectivity.

One of the challenges around adoption could be the confusing nomenclature used across the cellular IoT ecosystem, which makes it hard to know what to choose and why. At the top level, we’re now used to the transition from 4G to 5G, but these marketing terms are only relevant to consumers and businesses for smartphone and high-speed data connectivity. For lower data rate applications used in machine communication, there are separate standards to consider.

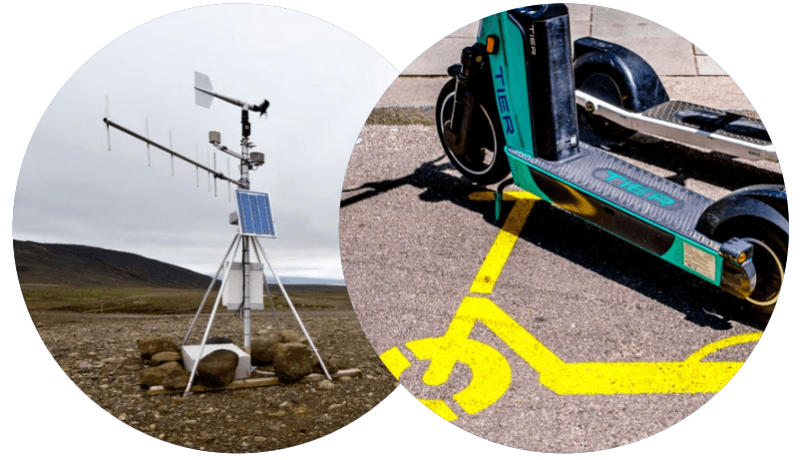

Higher Data Rate Cellular IoT

The first is LTE-M, which stands for Long-Term Evolution Machine Type Communication. This branches into two current standards, LTE Cat M1 and LTE Cat M2. The 3rd-Generation Partnership Project (3GPP) defines these standards, with new capabilities ratified in “releases.” Thus, LTE Cat M1 was part of Release 13 in 2015, and LTE Cat M2 was part of Release 14 in 2017. Cat M1 offers 1 Mbps uplink and downlink, while Cat M2 offers around 7 Mbps uplink and 4 Mbps downlink. Both support full- and half-duplex (Figure 1). For context, 5G smartphone networks average around 100 Mbps.

Part of the benefit of a lower data rate is the reduced power demands of LTE-M hardware. According to the 3GPP specification, the aim was to achieve ten years of operation on a 5 Wh battery. However, while that lifetime may be achievable, Brian Ray, an engineer now with Google, notes that achieving a 23 dBm transmit power during an uplink, the highest supported level, results in peak currents of around 500 mA. This poses a not-insignificant design challenge.
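To put these two figures side by side, the short Python sketch below converts the 23 dBm transmit level to milliwatts and works out the average power a 5 Wh battery can supply over ten years. The 3.6 V supply voltage is an assumption made purely for illustration (it is not stated above), and the 500 mA figure is treated as a supply-side peak current.

```python
def dbm_to_mw(dbm: float) -> float:
    """Convert a power level in dBm to milliwatts."""
    return 10 ** (dbm / 10)


def average_power_uw(energy_wh: float, years: float) -> float:
    """Average power (microwatts) that drains energy_wh over the given years."""
    hours = years * 365.25 * 24
    return energy_wh / hours * 1e6


if __name__ == "__main__":
    SUPPLY_V = 3.6        # assumed supply voltage, not from the article
    PEAK_CURRENT_A = 0.5  # peak current quoted for a 23 dBm uplink

    rf_mw = dbm_to_mw(23)
    budget_uw = average_power_uw(5, 10)
    peak_mw = PEAK_CURRENT_A * SUPPLY_V * 1000

    print(f"RF output at 23 dBm: {rf_mw:.0f} mW")
    print(f"Average budget for 10 years on 5 Wh: {budget_uw:.1f} uW")
    print(f"Peak supply power at {SUPPLY_V} V: {peak_mw:.0f} mW")
    print(f"Peak-to-average ratio: {peak_mw * 1000 / budget_uw:,.0f}:1")
```

Under these assumptions, the average budget is well under 100 µW while the transmit peak is in the watt range. This is why the radio has to spend almost all of its life asleep, and why the power supply must cope with short, heavy bursts.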

Coverage is also better than standard LTE thanks to a higher Maximum Coupling Loss (MCL). This figure defines the point at which a wireless system loses its ability to deliver its service. In a study by Sierra Wireless, an MCL of up to 164 dB for LTE Cat-M1 was determined, a significant improvement over the 142 dB of legacy LTE and much better than the 155.7 dB target that 3GPP set itself. In terms of capability, this means better connectivity inside buildings, which is important for smart metering applications where the building can contribute a 50 dB penetration loss, and better range outside.
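As a rough illustration of what those decibels buy, the sketch below subtracts the 50 dB building loss from each MCL figure and converts the remainder into a distance using the free-space path loss formula. The 800 MHz carrier frequency is an assumption, and free-space propagation is very optimistic compared with real terrain, so the absolute distances are only useful for comparing the two technologies.

```python
import math


def remaining_budget_db(mcl_db: float, penetration_loss_db: float) -> float:
    """Path-loss budget left over once the building has taken its share."""
    return mcl_db - penetration_loss_db


def free_space_range_km(path_loss_db: float, freq_mhz: float) -> float:
    """Distance at which free-space path loss equals path_loss_db.

    Uses FSPL(dB) = 20*log10(d_km) + 20*log10(f_MHz) + 32.44.
    """
    return 10 ** ((path_loss_db - 32.44 - 20 * math.log10(freq_mhz)) / 20)


if __name__ == "__main__":
    FREQ_MHZ = 800       # assumed carrier frequency, not from the article
    PENETRATION_DB = 50  # building penetration loss quoted in the text

    for name, mcl in [("Legacy LTE", 142), ("LTE Cat-M1", 164)]:
        budget = remaining_budget_db(mcl, PENETRATION_DB)
        distance = free_space_range_km(budget, FREQ_MHZ)
        print(f"{name}: {budget:.0f} dB left, free-space range ~{distance:.1f} km")
```

Whatever frequency is chosen, the 22 dB difference between the two MCL values corresponds to a range ratio of about 12.6 in free space. Real environments, with their obstacles and multipath, will yield a smaller gain.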

Like smartphones, LTE-M also supports mobility, meaning that your device is handed over from one cell tower to the next as it moves, making it ideal for sensors monitoring perishable goods on the move or tracking fleets of vehicles. Additionally, if you need voice occasionally as part of the system, such as in a fire alarm panel or a monitoring system for the elderly, Voice over LTE (VoLTE) is included. Finally, with a latency of under 15 ms, LTE-M could support an application that seems responsive to a human.

Cellular for Static IoT Nodes

The alternative IoT technology for cellular is NB-IoT. This also comes in two flavors. LTE Cat NB1 was formalized in Release 13, while LTE Cat NB2 has been about since Release 14. NB-IoT targets non-moving applications, such as smart meters in agriculture, weather stations, or sensor deployments at water treatment plants, as it doesn’t support cell tower handoff or VoLTE (Figure 2). Instead, there is a peak of power consumption as the device registers itself with its nearest tower, after which the wireless module can enter a sleep mode knowing that, upon wake, it can continue from where it left off.

Figure 2: A non-moving application, such as a weather station, benefits from the lower power demands of NB-IoT. (Source: Shutterstock)

NB-IoT data rates are much lower than those of LTE-M. LTE Cat NB1 achieves 26 kbps downlink and up to 66 kbps uplink, while LTE Cat NB2 reaches 127 kbps in the downlink and around 160 kbps in the uplink. Unlike LTE-M, NB-IoT only supports half-duplex mode. Latency is also much higher, with 1.6 to 10 seconds typically quoted. However, considering the intended use case, responding with a new actuator setting for a greenhouse window or water treatment sluice after receiving some sensor data won’t be an issue. It should also be noted that this is much better latency than competing low-power wide area networking (LPWAN) technologies such as LoRaWAN and Sigfox.

Achieving Low-Power IoT with Cellular

Power is one of the core requirements for an IoT application, as it will primarily run on either batteries or a renewable energy source, such as a solar panel. Both of these wireless technologies support various low-power modes to give developers options for improving battery life. The first of these is PSM, or Power Saving Mode. This allows the application to place the cellular radio module in a deep sleep state, resulting in a current draw of a few microamps in most cases. The device notifies its cell tower of its intention and can then sleep for up to 413 days. During this time, there is no way to pass data to the device. However, when it wakes up, there is no need to register with the cell tower.
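The 413-day limit falls out of how the requested sleep period is encoded. The sketch below assumes the 8-bit "GPRS Timer 3" layout from 3GPP TS 24.008 as I understand it (three unit bits followed by a five-bit step count). The unit table and the helper names `encode_t3412_ext` and `decode_t3412_ext` are illustrative and should be checked against the specification and your module's AT command manual, where the value is commonly passed as a binary string to a command such as AT+CPSMS.

```python
import math

# Unit field (upper 3 bits) -> seconds per step.
# Assumed from GPRS Timer 3 in 3GPP TS 24.008; verify before use.
T3412_EXT_UNITS = {
    0b011: 2,        # 2 seconds
    0b100: 30,       # 30 seconds
    0b101: 60,       # 1 minute
    0b000: 600,      # 10 minutes
    0b001: 3600,     # 1 hour
    0b010: 36000,    # 10 hours
    0b110: 1152000,  # 320 hours
}
MAX_STEPS = 31  # the value field is 5 bits wide


def encode_t3412_ext(seconds: int) -> str:
    """Encode a period, rounding up to the next step of the finest unit that fits."""
    if seconds <= 0:
        raise ValueError("sleep period must be positive")
    for unit_bits, unit_s in sorted(T3412_EXT_UNITS.items(), key=lambda kv: kv[1]):
        steps = math.ceil(seconds / unit_s)
        if steps <= MAX_STEPS:
            return f"{unit_bits:03b}{steps:05b}"
    raise ValueError("requested period exceeds the maximum timer value")


def decode_t3412_ext(bits: str) -> int:
    """Return the period in seconds (the 'deactivated' unit 111 is not handled)."""
    return T3412_EXT_UNITS[int(bits[:3], 2)] * int(bits[3:], 2)


if __name__ == "__main__":
    one_day = encode_t3412_ext(24 * 3600)
    longest = decode_t3412_ext("11011111")  # 31 steps of 320 hours
    print(f"One day encodes as {one_day}")
    print(f"Longest period: {longest / 86400:.1f} days")
```

The longest encodable period is 31 steps of 320 hours, which is where the figure of roughly 413 days comes from. Whether the network grants the requested value is a separate matter.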

The next mode is eDRX, or extended Discontinuous Reception. This lighter sleep mode saves power for up to 40 minutes with LTE-M or up to three hours with NB-IoT. Waking up from sleep is also faster than with PSM. However, these power-saving levers are not available in all locations. Their configuration depends on the service provider’s equipment, meaning that battery life in one country could be shorter than in others because a setting cannot be negotiated. Use of a service provider offering a roaming SIM may also limit access to these low-power features.

Because cellular IoT implementation varies so much and is dependent on so many factors, it’s no wonder that searching for guidance on power consumption is a fruitless task. More often than not, a Google search delivers pages repeating the “10 years of battery life” claim, seemingly for both LTE-M and NB-IoT and without details on battery capacity.

Cellular IoT in the Lab

Thankfully, teams at various institutes have taken the time to study power consumption. Their results provide some guidance on what to expect and show how cellular IoT performs when compared to the alternatives. For example, Tan compares LoRa and NB-IoT. In the experiment, MQTT packets are sent, each containing 50 bytes of data. Using an optimal configuration and good connection conditions, NB-IoT requires around 200 mJ per transaction.

LoRa, on the other hand, provides the developer with more control over the transmission configuration by setting a spreading factor (SF). At SF7, the bit rate is higher, meaning less air time, while at SF12, the highest spreading factor and therefore the lowest bit rate, the air time is the longest when transferring the same number of bytes. The risk, however, is that SF7 reduces the range too much for a successful transfer, meaning the exchange of data must be repeated. During testing, SF7 required just 100 mJ per transaction. However, increasing to SF12 required 250 mJ.
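The link between spreading factor and air time can be seen from the standard LoRa relationships: a symbol lasts 2^SF / BW seconds and carries SF bits before coding overhead. The sketch below tabulates this for SF7 to SF12. The 125 kHz bandwidth and 4/5 coding rate are assumptions chosen as common settings, not values taken from the study, and the result is a nominal bit rate that ignores preamble, header, and CRC.

```python
def lora_symbol_time_ms(sf: int, bandwidth_hz: float) -> float:
    """Duration of one LoRa symbol in milliseconds (2^SF chips at BW chips/s)."""
    return (2 ** sf) / bandwidth_hz * 1000


def lora_bit_rate_bps(sf: int, bandwidth_hz: float, coding_rate: float = 4 / 5) -> float:
    """Nominal bit rate: SF bits per symbol, scaled by the coding rate."""
    return sf * coding_rate * bandwidth_hz / (2 ** sf)


if __name__ == "__main__":
    BANDWIDTH_HZ = 125_000  # assumed channel bandwidth

    for sf in range(7, 13):
        print(f"SF{sf}: symbol {lora_symbol_time_ms(sf, BANDWIDTH_HZ):7.3f} ms, "
              f"nominal rate {lora_bit_rate_bps(sf, BANDWIDTH_HZ):7.1f} bit/s")
```

Each step up in spreading factor doubles the symbol time, so an SF12 symbol lasts 32 times longer than an SF7 symbol. The transmitter stays on correspondingly longer for the same payload, which is where the extra energy goes.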

The conclusion is that, for a 3,000 mAh battery, LoRa using SF7 could power this configuration for over 32 years, but using SF12 reduces this to just under 13 years. By comparison, using NB-IoT could deliver just under 20 years of operation. Considering that, under real-life conditions, LoRa would need to adapt its SF and other transceiver configuration (bandwidth) to maintain successful data transfers, such a variation in battery life may be considered too risky.
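For readers who want to run this kind of estimate against their own duty cycle, here is a minimal sketch of the arithmetic. It does not reproduce the study’s model: the 3.6 V cell voltage, the 24 messages per day, and the sleep current are all assumptions introduced for illustration, and self-discharge and temperature effects are ignored.

```python
def battery_life_years(capacity_mah: float, voltage_v: float,
                       energy_per_tx_mj: float, tx_per_day: float,
                       sleep_current_ua: float = 0.0) -> float:
    """Estimate battery life from per-transaction energy and sleep current."""
    battery_j = capacity_mah / 1000 * 3600 * voltage_v       # mAh -> coulombs -> joules
    tx_j_per_day = energy_per_tx_mj / 1000 * tx_per_day      # energy spent transmitting
    sleep_j_per_day = sleep_current_ua * 1e-6 * voltage_v * 86400  # energy spent asleep
    return battery_j / (tx_j_per_day + sleep_j_per_day) / 365.25


if __name__ == "__main__":
    CAPACITY_MAH = 3000  # battery capacity from the text
    VOLTAGE_V = 3.6      # assumed cell voltage
    TX_PER_DAY = 24      # assumed message rate (one per hour)
    SLEEP_UA = 5.0       # assumed sleep current

    for label, energy_mj in [("LoRa SF7", 100), ("NB-IoT", 200), ("LoRa SF12", 250)]:
        years = battery_life_years(CAPACITY_MAH, VOLTAGE_V, energy_mj,
                                   TX_PER_DAY, SLEEP_UA)
        print(f"{label}: ~{years:.1f} years")
```

With the sleep current set to zero, lifetime scales inversely with the energy per transaction, so the 2.5-fold difference between SF7 (100 mJ) and SF12 (250 mJ) becomes a 2.5-fold difference in lifetime, in line with the roughly 32 and 13 years quoted above. Any non-zero sleep current narrows that gap.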

Comparing with LoRaWAN

This risk is also highlighted in research from a team at the University of Antwerp. In their conclusion, they also state that, while LoRaWAN consumed the lowest power under controlled laboratory conditions indicating an application life of years, “with the real time [sic] deployment it narrowed down to a few months.” They also tested NB-IoT alongside Sigfox and DASH7. While DASH7 offered even better power consumption than LoRaWAN under the same conditions, the team surmised that NB-IoT may still be the better option despite the slightly higher power consumption. NB-IoT may simply tick more boxes when factoring in everything an IoT application requires, such as availability, latency, coverage, security, robustness, and throughput.

Wireless technology has been democratized over the past two decades thanks to CMOS radio transceivers, highly integrated radio modules, and tiny antennas. Even the software stacks are often freely available. However, designers often only work on the end node, relying on others for the infrastructure they’ll connect to. Despite the ubiquity of LTE cellular networks and their ease of use for smartphone users, the same cannot be said for those hoping to rely upon them for IoT.

Not for the Faint-Hearted

For the uninitiated, it’s challenging to find reliable guidance on what cellular IoT can and can’t do, whether important power-saving features are available globally, or how to determine their availability. This makes cellular IoT look like the poor sibling of the smartphone industry. Recent news that Vodafone wants to sell its business focused on IoT services doesn’t help shake this image. Although they sold 150m IoT SIM connections last year, that division only makes up 2% of their service revenue.

Although research shows cellular IoT to be competitive in power consumption and battery life compared to alternative LPWAN solutions, it still has its foibles. With network infrastructure from some providers and in some countries failing to implement power-saving support universally, engineers will clearly be uncomfortable committing to battery life values that are attractive to customers. What is clear is that each LPWAN approach has disadvantages, and design teams need to weigh them all up based on each use case. And, it would seem a healthy portion of research time is also needed to achieve, as far as possible, an apples-to-apples comparison of the LPWAN solutions that fit the bill most closely.

Editor's note: This article (230376-01) appears in Elektor Mag Sep/Oct 2023.

Questions About Cellular or This Article?

Do you have technical questions or comments about this article? Email the author at stuart.cording@elektor.com or contact Elektor at editor@elektor.com.
