TensorFlow Lite for Microcontrollers: An Introduction

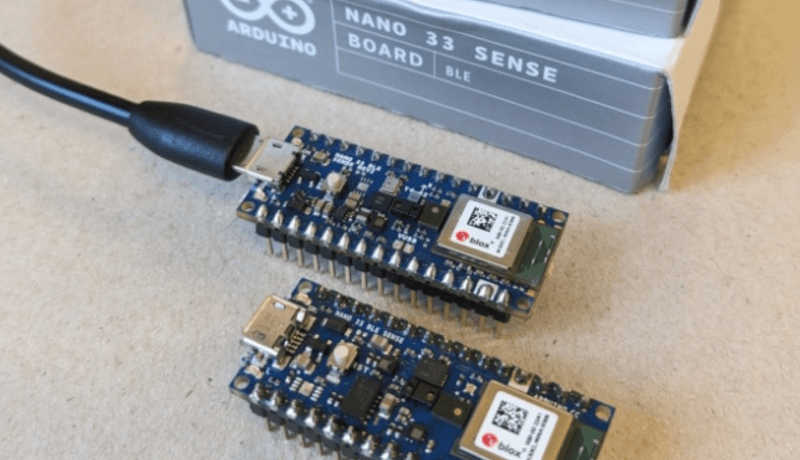

With TensorFlow Lite for Microcontrollers, you can run machine learning models on resource-constrained devices. Want to learn more? You can use it with Edge Impulse for speech recognition on an Arduino Nano 33 BLE Sense.

AI on the Edge

Artificial intelligence (AI) and machine learning (ML) are the buzzwords of the moment, and the two terms are often wrongly used interchangeably. Facebook, Amazon, Google and many others use ML systems to provide you with content tailored as closely as possible to your tastes and habits. ChatGPT is another spectacular and popular example of a service built on ML. What these companies have in common is access to servers with huge computing power, which they use to train models on gigantic volumes of data and to respond fluidly to queries from a large number of users.

This is changing, however, with the emergence of AI “on the edge.” Edge AI refers to the deployment of artificial intelligence algorithms and processing at the edge of the network, which...