Hubs Become Central to the IoT


Spotted @ Hot Chips: Mediatek Helio X20 series

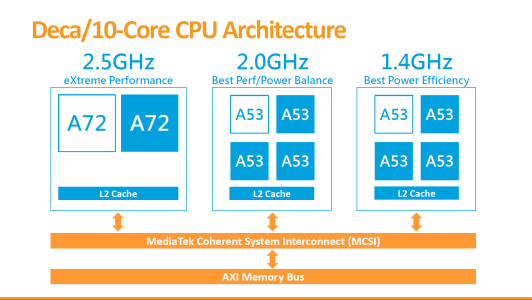

Last year’s Hot Chips conference gave a look at a mobile application processor design that hinted at an answer to the need for processing power. The chip, from Mediatek, aims at a compromise between performance and energy efficiency. Starting from ARM’s big.LITTLE concept, the Mediatek designers came up with a 10-core processing subsystem arranged in three clusters. The X23 has four low-power, 1.4-GHz ARM® Cortex®-A53 cores in one cluster, four 1.85-GHz A53 cores in a second cluster, and two speed-optimized 2.3-GHz A72 cores in a third. All share a hierarchical coherent interconnect and a dynamic task scheduler. The 10-CPU subsystem should be able to move gracefully and on the fly from MCU-like power efficiency to, given enough threads, near server-class compute performance, while executing a mix of real-time and background tasks. That is, after all, what an advanced smartphone requires, and that sounds a great deal like what we want from our hub.

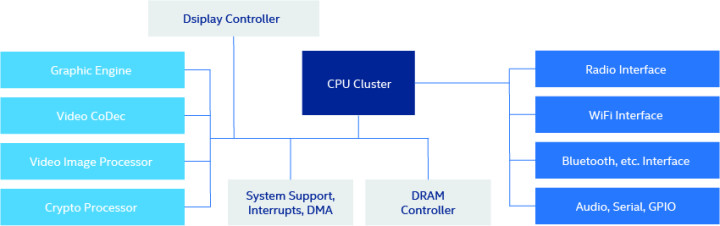

Since this CPU subsystem is embedded in a smartphone SoC, it will be accompanied by a GPU, a cellular modem, Wi-Fi and Bluetooth support, near-field communication (NFC), and security hardware. Given the volumes cellphone SoCs reach in production, pricing should be aggressive. So these SoCs can be very attractive bases for IoT hubs.

From a distance, this could sound like a description of a very different kind of device: a smartphone (Figure 2). And in fact there is considerable interest in using smartphones, or even a subset of a smartphone chipset, as a hub in control applications. The Internet connectivity is already in place via either Wi-Fi or the cellular network, at least some of the needed local wireless link support is there, and Android provides an open platform that is relatively easy to extend. But what about processing power?

Power to the edge

Ten CPU cores as used in the Mediatek LTE platform (inset) might seem massive overkill for a hub that is basically just reading sensors, executing a control algorithm, sending commands to actuators, and serving as a firewall. Granted, the number of CPUs is larger because of Mediatek’s little-medium-big strategy, and the plethora allows you to lock a time-critical task to a dedicated CPU if you need to. But more than that, the abundance of processing power serves a trend. Algorithms are getting more complex.
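As a minimal sketch of what locking a task to a dedicated CPU can look like on a Linux-based hub, the Python snippet below pins the calling process to a single core. The helper name `pin_to_cpu` and the choice of core are illustrative assumptions, not anything taken from the Mediatek presentation. The underlying call is Linux-specific, and keeping other tasks off the chosen core requires separate operating-system configuration.

```python
import os


def pin_to_cpu(cpu_index):
    """Pin the calling process to one CPU and return the resulting affinity set.

    Returns an empty set on platforms that do not provide
    os.sched_setaffinity (the call is available on Linux).
    """
    if not hasattr(os, "sched_setaffinity"):
        return set()  # affinity control not available on this platform

    allowed = os.sched_getaffinity(0)  # 0 means "the calling process"
    if cpu_index not in allowed:
        raise ValueError(f"CPU {cpu_index} is not in the allowed set {sorted(allowed)}")

    os.sched_setaffinity(0, {cpu_index})
    return os.sched_getaffinity(0)


if __name__ == "__main__":
    # Hypothetical usage: pin the control loop to the lowest-numbered allowed CPU.
    if hasattr(os, "sched_getaffinity"):
        print(pin_to_cpu(min(os.sched_getaffinity(0))))
```

Which physical core a given index maps to (little, medium, or big) depends on the SoC and kernel, so a real hub would need to check the platform's CPU topology before choosing.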

You can see the trend in more sophisticated control functions and in, for example, use of Kalman filters, with their intense matrix arithmetic, in sensorless motor control and battery management. But with the resurgence of machine learning, the trend is about to blossom.
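To give a feel for the matrix arithmetic involved, here is a minimal sketch of one predict/update cycle of a two-state Kalman filter written in plain Python. The constant-velocity model, the noise values `q` and `r`, the initial covariance, and the function names are all assumptions chosen for illustration; a motor-control or battery-management filter would use its own state model and usually more states.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a):
    return [list(row) for row in zip(*a)]


def madd(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def kalman_step(x, P, z, dt, q, r):
    """One predict/update cycle.

    x: 2x1 state column [[position], [velocity]]
    P: 2x2 state covariance
    z: scalar position measurement (measurement matrix H = [1, 0])
    """
    F = [[1.0, dt], [0.0, 1.0]]      # constant-velocity transition
    Q = [[q, 0.0], [0.0, q]]         # simplified process noise

    # Predict: x = F x, P = F P F^T + Q
    x = matmul(F, x)
    P = madd(matmul(matmul(F, P), transpose(F)), Q)

    # Update: with H = [1, 0] the innovation covariance is a scalar
    innovation = z - x[0][0]
    s = P[0][0] + r
    K = [[P[0][0] / s], [P[1][0] / s]]   # Kalman gain
    x = [[x[0][0] + K[0][0] * innovation],
         [x[1][0] + K[1][0] * innovation]]
    I_KH = [[1.0 - K[0][0], 0.0], [-K[1][0], 1.0]]
    P = matmul(I_KH, P)                  # P = (I - K H) P
    return x, P


def track(measurements, dt, q=1e-4, r=0.01):
    """Run the filter over a sequence of position measurements."""
    x = [[0.0], [0.0]]
    P = [[100.0, 0.0], [0.0, 100.0]]     # large initial uncertainty
    for z in measurements:
        x, P = kalman_step(x, P, z, dt, q, r)
    return x[0][0], x[1][0]
```

Even this small case needs several 2x2 matrix products per sample. The cost of the covariance update grows roughly with the cube of the state dimension, which is why larger filters running at high sample rates become a noticeable CPU load.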

Among the earliest manifestations of this resurgence was vision processing. Designers recognized that — quite apart from its obvious uses in surveillance and automotive driver assistance — image classification could often be the most effective way to estimate the state of a system. One camera can see what it might take hundreds of sensors to measure. An early application used fixed cameras to observe a street, and image processing to determine which parking spaces were occupied, replacing dozens of buried sensors and hundreds of meters of underground cable.

The ascendency of convolutional neural networks (CNNs) as the most successful image-classification algorithm has led to use of CNNs, and exploration of other forms of deep-learning networks such as recurrent neural networks, in IoT hubs. The evaluation of such models quickly uses up CPU cores. That leads to interest in many-core processors and in hardware accelerators, such as GPUs or FPGAs, for the hubs. And that brings us to a second Hot Chips paper.
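A back-of-the-envelope count shows why evaluation is so demanding. The sketch below counts the multiply-accumulate (MAC) operations in a single convolutional layer. The layer dimensions and frame rate in the usage example are hypothetical, chosen only to illustrate the scale, and the function names are made up for this example.

```python
def conv_layer_macs(out_h, out_w, in_channels, out_channels, kernel_h, kernel_w):
    """Multiply-accumulates needed to evaluate one convolutional layer once.

    Every output pixel of every output channel sums over a
    kernel_h x kernel_w window across all input channels.
    """
    return out_h * out_w * out_channels * in_channels * kernel_h * kernel_w


def macs_per_second(macs_per_frame, frames_per_second):
    """Sustained MAC rate needed to keep up with a video stream."""
    return macs_per_frame * frames_per_second


if __name__ == "__main__":
    # Hypothetical layer: 3x3 kernels, 64 input and 64 output channels,
    # producing a 224x224 output feature map.
    per_frame = conv_layer_macs(224, 224, 64, 64, 3, 3)
    print(per_frame)                       # 1849688064
    print(macs_per_second(per_frame, 30))  # rate needed at 30 frames per second
```

That is roughly 1.85 billion MACs per frame for one layer under these assumptions, and a deep network stacks many such layers.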

At the conference, Movidius described a deep-learning SoC — essentially, a collection of fixed-function image processors, RISC CPU cores, vector processors for matrix arithmetic, and memory blocks, all optimized for evaluating deep-learning networks. The company claimed performance superior to that of two unidentified GPUs, but at low-enough power to need no fan or even heat sink.

Shifting concepts

We’ve watched an evolution from connected local controllers to smart hubs to hubs hosting deep-learning networks. This may prove a long-term solution for systems that can be satisfactorily managed using only their current observable state as input. But there is growing interest in going beyond this concept, to systems that can call on not only their own state, but upon history, and even upon unstructured pools of seemingly unrelated data. This is the realm of big-data analysis.

Examples of the use of big-data techniques in system management predate the current popularity of deep learning. Machine maintenance systems have used big-data analyses of operating history to identify predictors of impending failure, for example, or to track down the location of parts from a suspect lot. The gradual blending of traditional big-data techniques, such as statistical analyses and relevance ranking, with deep-learning algorithms will only promote the importance of cloud-based analyses to embedded systems.

That does leave us with several questions. First, how much does the big-data algorithm need to know about the state of the system, and in how timely a manner? The presumption in most marketing presentations seems to be that the system will continually log all of its state to the cloud. That is how you get a PowerPoint slide saying that a smart car generates 25 GB per hour of new data. But it seems far more likely that the IoT hub will filter, abstract, represent, and prioritize the state information, reducing the flow significantly.
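The kind of filtering and abstraction a hub might apply can be sketched in a few lines. The example below shows two common reduction techniques: a deadband filter that forwards a reading only when it has changed meaningfully, and a window summary that replaces raw samples with a few statistics. The function names, threshold, and sample data are illustrative assumptions, not a description of any particular product.

```python
def deadband(samples, threshold):
    """Keep a (time, value) sample only if its value differs from the
    last kept value by more than threshold. The first sample is always kept."""
    kept = []
    last = None
    for t, value in samples:
        if last is None or abs(value - last) > threshold:
            kept.append((t, value))
            last = value
    return kept


def summarize(values):
    """Replace a window of raw readings with a compact summary."""
    return {
        "min": min(values),
        "max": max(values),
        "mean": sum(values) / len(values),
        "count": len(values),
    }


if __name__ == "__main__":
    # Hypothetical temperature readings as (seconds, degrees C)
    readings = [(0, 20.0), (1, 20.1), (2, 20.05), (3, 21.0), (4, 21.2), (5, 19.0)]
    print(deadband(readings, threshold=0.5))
    print(summarize([value for _, value in readings]))
```

With these sample readings the deadband filter forwards three of the six samples. The reduction achieved in practice depends entirely on how noisy the signal is and how much detail the cloud-side analysis actually needs.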

Another question involves performance in the cloud. If the cloud-based analysis is being done to predict next month’s energy consumption or to schedule annual maintenance, there is no great hurry. If it is part of a low-frequency control loop or a functional-safety system, there are hard deadlines. And that is where the third Hot Chips paper comes in.

Baidu presented a software-defined, FPGA-based accelerator for cloud data centres, intended to slash execution time for a wide variety of big-data analyses. Baidu’s specific example was an SQL query accelerator, which the presenter said would be useful in about 40 percent of big-data analyses. But the reconfigurable architecture would be applicable across a wider range of tasks. Such acceleration, particularly if used with a deterministic network connection, could extend the usefulness of big-data algorithms in control systems, working hand-in-hand with smart hubs.

Conclusion

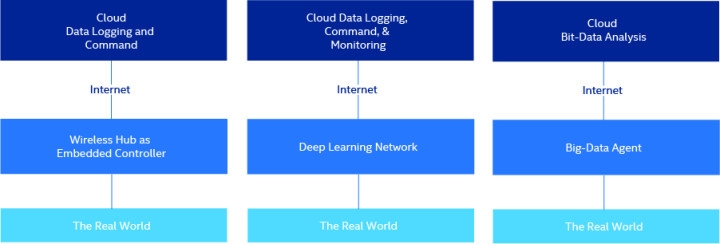

We have seen how practical issues such as bandwidth, latency, and security argue in favour of smart IoT hubs. Once the hub is smart, at least three quite different architectures become interesting:

- the hub as connected system controller;

- the hub as deep-learning controller;

- and the hub as agent for a cloud-based big-data system (Figure 3).

Some combination of the three should be right for just about any connected embedded system.


About Ron Wilson

Ron Wilson, a long-time technology editor, follows emerging system design issues and creates, edits, and curates technical content for Intel PSG.
