Physics stimulates AI methods


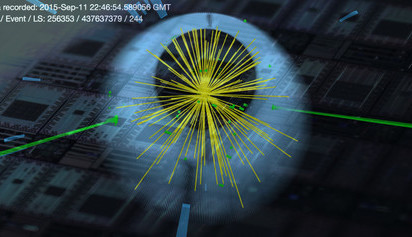

Researchers from the California Institute of Technology (Caltech) and the University of Southern California (USC) are the first to successfully apply quantum computing to a physics problem. Using quantum-compatible machine learning methods, they have found a way to distill the signal of rare Higgs boson events from a large amount of 'noise'.

The Higgs boson, whose existence was experimentally demonstrated only in 2012, is responsible for the mass of the elementary particles.

The new method also appears to work well with small datasets, in contrast to conventional methods. The researchers, led by professors Maria Spiropulu and Daniel Lidar, programmed a so-called quantum annealer (a type of quantum computer that is capable of running optimization programs) to search for patterns in the dataset, with the aim of separating the useful data from the noise and 'junk'.
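To give a feel for what an "optimization program" for an annealer can look like, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the researchers' actual code: the energy function, the toy data and all names (`build_qubo`, `energy`, `brute_force_minimum`, `VOTES`, `LABELS`) are hypothetical. The idea is that a handful of simple "weak" classifiers each vote +1 (signal) or -1 (noise) on every training event, and the optimizer chooses which of them to switch on so that their averaged vote matches the hand-sorted labels, with a small penalty per classifier used. A quantum annealer searches for the lowest-energy setting of such binary switches; here a brute-force search over all settings stands in for the hardware.

```python
from itertools import product

def build_qubo(votes, labels, penalty):
    """Coefficients of E(s) = sum_i linear[i]*s_i + sum_{i<j} quadratic[i,j]*s_i*s_j.

    E(s) equals (up to a constant) the squared error between the labels and
    the averaged vote (1/n) * sum_i s_i * votes[t][i], plus penalty * sum_i s_i.
    votes[t][i] is the +1/-1 vote of hypothetical weak classifier i on event t.
    """
    n = len(votes[0])
    linear = []
    for i in range(n):
        self_term = sum(v[i] * v[i] for v in votes) / n**2
        label_term = -2.0 * sum(y * v[i] for v, y in zip(votes, labels)) / n
        linear.append(self_term + label_term + penalty)
    quadratic = {}
    for i in range(n):
        for j in range(i + 1, n):
            quadratic[(i, j)] = 2.0 * sum(v[i] * v[j] for v in votes) / n**2
    return linear, quadratic

def energy(selection, linear, quadratic):
    """Energy of one on/off setting (a tuple of 0s and 1s)."""
    e = sum(h * s for h, s in zip(linear, selection))
    e += sum(c * selection[i] * selection[j] for (i, j), c in quadratic.items())
    return e

def brute_force_minimum(linear, quadratic):
    """Stand-in for the annealer: try every setting and keep the lowest energy."""
    settings = product((0, 1), repeat=len(linear))
    return min(settings, key=lambda s: energy(s, linear, quadratic))

# Toy training set (made up): six events, three weak classifiers.
LABELS = [1, 1, 1, -1, -1, -1]
VOTES = [
    (1, 1, 1),
    (1, 1, 1),
    (1, -1, -1),
    (-1, -1, 1),
    (-1, -1, 1),
    (-1, 1, -1),
]

linear, quadratic = build_qubo(VOTES, LABELS, penalty=0.5)
best = brute_force_minimum(linear, quadratic)
print("classifiers switched on:", best)
```

In this toy example the first classifier agrees with every label, the second with four of the six, and the third is uncorrelated with them, so the lowest-energy setting keeps only the first. Brute force works for three switches but doubles in cost with every additional one, which is the kind of search an annealer is meant to take over.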

A popular existing approach to classifying data is the neural network, which is known to be efficient at finding rare patterns in a dataset. However, the patterns found this way are difficult to interpret, because the classification process does not indicate how they were found. By contrast, techniques that allow for better interpretation make more mistakes and are less efficient.

The existing methods for classifying data depend to a large extent on the size and quality of the training set, a part of the full dataset that has been sorted by hand. In modern high-energy physics, however, this 'sorting by hand' is problematic, because particle accelerators such as the Large Hadron Collider (where the Higgs boson was discovered) produce enormous amounts of data in which the rare events are hidden. The new quantum program is, as Professor Spiropulu remarks, simpler and needs only a very small amount of training information.

The researchers have published their findings in Nature.

(Video: D-Wave Systems)
