ESP32-Based Personal AI Terminal with ChatGPT


Whether AI solutions such as ChatGPT can pass the Turing test is still up for debate. Back in the day, Turing imagined a human operator judging replies to questions sent and received using an electromechanical teletype machine. Here we build a 21st-century version of Turing’s original experimental concept, using an ESP32 with a keyboard and TFT display to communicate exclusively with ChatGPT via the Internet. In addition, Google text-to-speech together with a tiny I2S amplifier module and speaker lets you listen in to the conversation. In our case, it’s obvious from the start that we are communicating with a machine — isn’t it?

There is no doubt that AI tools such as OpenAI’s ChatGPT and Google’s Gemini can be real game changers in so many situations. I have used ChatGPT to develop quite complex control solutions. I provide the initial idea, and as I give more inputs, it refines the code, making it better with each iteration. It can even convert Python code to MicroPython or an Arduino sketch. The key is to guide the process carefully to prevent it from straying too far off course. There are times when it does deviate and repeats the same mistakes, but I actually enjoy finding these bugs and steering ChatGPT’s output to more closely match my target outcome.

AI Terminal Hardware

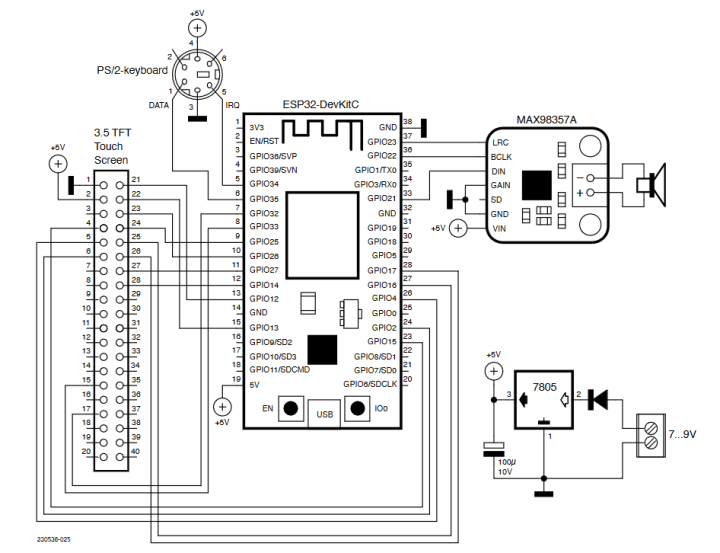

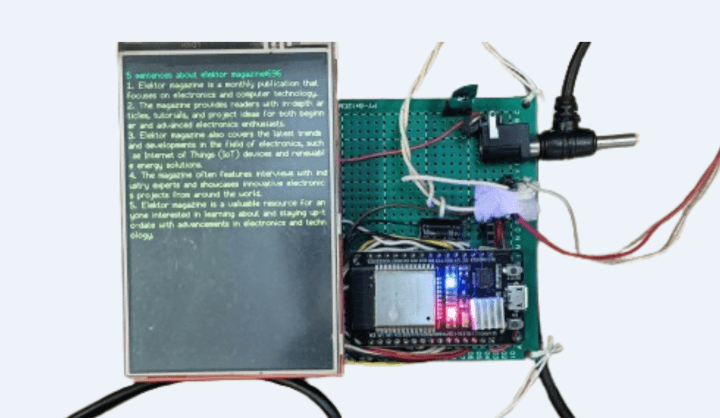

The heart of this AI terminal build is Espressif’s ESP32 development module. Its dual-core architecture has enough processing reserve to take care of Wi-Fi communications, handle the serial input from a PS/2 keyboard, send data to the 3.5-inch TFT display, and output digital audio data to the I2S module. The complete schematic for the ChatGPT terminal is shown in Figure 1.

The 3.5-inch TFT touch display used has a parallel interface rather than the alternative SPI version. This inevitably uses more interconnect wires, but in this application, we have enough spare GPIOs and the interface offers a significantly faster response time. The touch screen features are not used in this application.
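To get a feel for why the bus width matters, the time needed to push one full frame can be estimated from the number of bits to move and the transfer rate. The sketch below is only a back-of-the-envelope model: the article gives neither the display resolution nor any bus clocks, so the 480 × 320 resolution, 16 bits per pixel, 40 MHz SPI clock, and 10 million parallel writes per second used in the usage note are assumptions for illustration, and `fullFrameMs` is a hypothetical helper, not part of the project sketch.

```cpp
#include <cassert>
#include <cstdint>

// Estimated time in milliseconds to transfer one full frame.
// bitsPerTransfer:    1 for SPI (one bit per clock), 8 for an 8-bit parallel bus.
// transfersPerSecond: clock or write-strobe rate (an assumed value, not measured).
double fullFrameMs(std::uint32_t width, std::uint32_t height,
                   std::uint32_t bitsPerPixel,
                   std::uint32_t bitsPerTransfer,
                   double transfersPerSecond) {
    assert(bitsPerTransfer > 0 && transfersPerSecond > 0.0);
    const double totalBits = static_cast<double>(width) * height * bitsPerPixel;
    return totalBits / bitsPerTransfer / transfersPerSecond * 1000.0;
}
```

With the assumed figures, `fullFrameMs(480, 320, 16, 1, 40e6)` gives about 61 ms for SPI, while `fullFrameMs(480, 320, 16, 8, 10e6)` gives about 31 ms for the parallel bus. At equal transfer rates, the 8-bit bus is eight times faster in this model; real throughput also depends on driver overhead.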

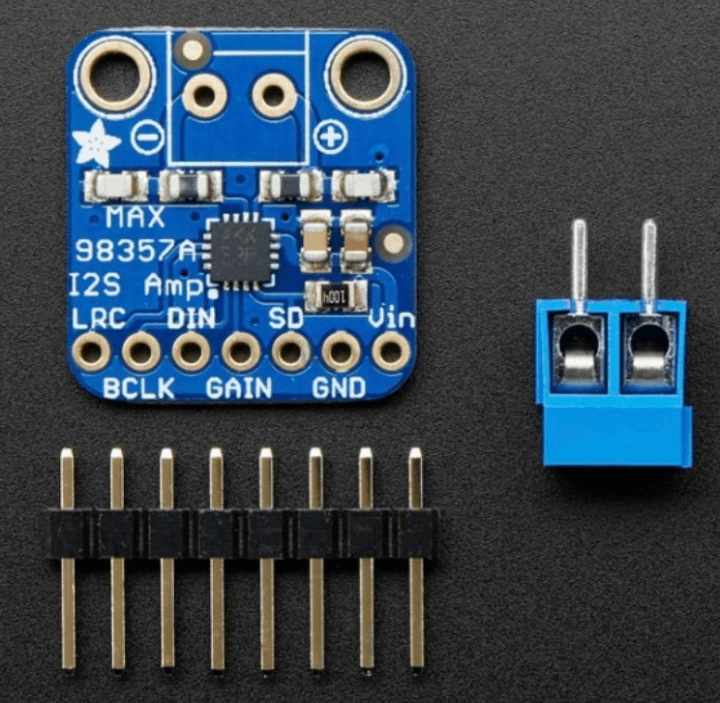

A MAX98357A I2S amplifier module (Figure 2) takes the digital I2S audio signal generated by the ESP32 and converts it into analog audio. A built-in 3-W class D amplifier boosts the signal to a good listening level. The gain input is tied to ground, which according to the MAX98357A datasheet selects a fixed gain of 12 dB and gives plenty of volume. The class D output can drive a 4-Ω speaker.

Don’t Lose the Key

ChatGPT was developed by OpenAI. It generates text-based responses to prompts or questions entered by the user. Usually, it works through a browser interface. The OpenAI API has been designed for use by developers and businesses so that they can integrate AI features into their own applications, software, or websites. It’s not just a chatbot interface, but a flexible programming interface allowing businesses to embed ChatGPT’s capabilities into their products. Developers send data to the API programmatically and get responses back. To use the OpenAI API, you will need a unique key, which is generated for your account when you sign up. Go to the OpenAI website and click on the Sign up button.

Fill out the registration form with your email address, password, and other required information. Once you have entered these, navigate to your OpenAI account dashboard and click on the New Project button. Give your project a name and description (optional). In your project settings, click on the API Keys tab. You’ll see a list of existing secret keys (Figure 3). Click the Create new secret key button to generate a new key. Make a copy of this generated API key and save it securely, as you won’t be able to retrieve it again for security reasons. You’ll need this key to authenticate your applications with OpenAI’s services. On their site there is also a developer’s quickstart tutorial which guides you through the process of generating a response via the API using the generated key to authorize your access.

At the time of writing, OpenAI provides free credits when you first sign up, which you can use to experiment with the API. After you use up all these credits, you will need to pay based on your usage. If you haven’t already set up a payment method for billing, it will be necessary to do so. Read through OpenAI’s usage guidelines and terms of service.

Text to Speech

A Text to Speech (TTS) API is used to convert the textual response from OpenAI into a digital audio data stream. There are a couple of text-to-speech APIs we can use for this. OpenAI has its own TTS API offering a number of different voice alternatives which sound quite natural. To access this API, you use the same key you were allocated to use the OpenAI API.

For this project, we make use of the Google Cloud Text-to-Speech API. It offers a diverse range of voices in various languages and dialects. Compared to OpenAI TTS, the voices sound a little more mechanical, and long text strings make the output break up. This API is, however, free to use at the time of writing, whereas the OpenAI TTS API incurs a charge.

To start using Google TTS, we first need to create a project on Google Cloud and enable the Text-to-Speech API to get our API key. Text strings can then be posted to the API using an HTTP POST request along with the key. The audio data returned is then played back as an I2S digital audio stream, which the MAX98357A converts into an analog audio signal.

Software: Libraries

The Arduino sketch is included. Check that all the libraries referenced in the header of the sketch are installed in your environment; if not, install them now using the Arduino IDE Library Manager. Using the methods available in the Audio.h library, it was a simple job to produce the audio output to accompany the text written to the TFT display. It was only necessary to add a few lines in the loop to generate the audio. Check it out in the Arduino sketch.

#include <PS2Keyboard.h>   // PS/2 keyboard input
#include <WiFi.h>
#include <HTTPClient.h>
#include <ArduinoJson.h>
#include <SPI.h>
#include <TFT_eSPI.h>      // hardware-specific TFT library
#include "Audio.h"         // I2S audio library

// GPIO connections to the I2S board
#define I2S_DOUT 21
#define I2S_BCLK 22
#define I2S_LRC  23

Audio audio;               // create the audio instance

The ArduinoJson library (ArduinoJson.h) is used to parse the JSON-formatted response data from the OpenAI API into a form that the Arduino code can use.

Software: ChatGPT API request

The process of interacting with the OpenAI API is contained in the makeApiRequest(String prompt1) function:

1. First, we set up an HTTP client:

HTTPClient http;
http.setTimeout(24000); // 24-second timeout
http.begin("https://api.openai.com/v1/chat/completions");
http.addHeader("Content-Type", "application/json");
http.addHeader("Authorization", "Bearer " + String(api_key));

2. Next, prepare the payload which will be JSON formatted:

StaticJsonDocument<1024> jsonPayload;
// fixed capacity of 1 KB
jsonPayload["model"] = "gpt-3.5-turbo";
// model is gpt-3.5-turbo, change if you have access to 4 or 4.5
jsonPayload["temperature"] = 0.8;
// randomness of the response: the higher the value, the more random the reply
jsonPayload["max_tokens"] = 2000;
// upper limit on the number of tokens (word fragments and punctuation) generated in the reply

3. Prepare the message (a nested array of messages) and serialize the payload to a JSON string:

// "messages" is an array of objects, each with a role and a content field
JsonArray messages = jsonPayload.createNestedArray("messages");
JsonObject userMessage = messages.createNestedObject();
userMessage["role"] = "user";
userMessage["content"] = prompt1;

String payloadString;
serializeJson(jsonPayload, payloadString);

4. Send the request and receive the response:

int httpResponseCode = http.POST(payloadString);
// send the request to the API
if (httpResponseCode == HTTP_CODE_OK) {
  String response = http.getString();
  // if everything went OK, read the reply body into a string
  ...

5. The reply from the API is a JSON document that carries more than just the answer (an ID, the model name, token usage, and so on), most of which is not useful for our application. Here we parse the response and extract only the reply text so that it can be shown on the TFT display:

StaticJsonDocument<1024> jsonResponse;
// parse the JSON response string
DeserializationError err = deserializeJson(jsonResponse, response);
if (err) {
  // e.g. NoMemory if the reply does not fit into the 1 KB document
  return String("JSON error: ") + err.c_str();
}
// choices[0] is the first entry, which contains our reply
String assistantReply = jsonResponse["choices"][0]["message"]["content"].as<String>();
return assistantReply;
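The request side of the steps above can be tried out on a PC in plain C++. The sketch below is not the author's code. It only illustrates roughly what string ends up on the wire and how the result of `http.POST()` might be turned into a short message for the display. `escapeJson`, `buildChatPayload`, and `describeHttpResult` are hypothetical helpers introduced here. On the ESP32, ArduinoJson takes care of the escaping, and its exact number formatting may differ slightly from this hand-built string.

```cpp
#include <cassert>
#include <cstdio>
#include <string>

// Escape a string so it can be embedded inside a JSON string literal.
std::string escapeJson(const std::string& in) {
    std::string out;
    out.reserve(in.size() + 8);
    for (unsigned char c : in) {
        switch (c) {
            case '"':  out += "\\\""; break;
            case '\\': out += "\\\\"; break;
            case '\n': out += "\\n";  break;
            case '\r': out += "\\r";  break;
            case '\t': out += "\\t";  break;
            default:
                if (c < 0x20) {  // remaining control characters
                    char buf[7];
                    std::snprintf(buf, sizeof(buf), "\\u%04x",
                                  static_cast<unsigned>(c));
                    out += buf;
                } else {
                    out += static_cast<char>(c);
                }
        }
    }
    return out;
}

// Hypothetical helper: build the chat request body with the same
// fields and values that the article sets via ArduinoJson.
std::string buildChatPayload(const std::string& prompt) {
    assert(!prompt.empty());
    return std::string("{\"model\":\"gpt-3.5-turbo\",")
         + "\"temperature\":0.8,"
         + "\"max_tokens\":2000,"
         + "\"messages\":[{\"role\":\"user\",\"content\":\""
         + escapeJson(prompt) + "\"}]}";
}

// Hypothetical helper: turn the value returned by http.POST() into a
// short status text. Negative values are client-side errors reported
// by HTTPClient (no valid HTTP status was received).
std::string describeHttpResult(int code) {
    if (code < 0)    return "Connection error";
    if (code == 200) return "OK";
    if (code == 401) return "Check API key";
    if (code == 429) return "Rate limit or quota reached";
    if (code >= 500) return "Server error, retry later";
    return "HTTP error " + std::to_string(code);
}
```

Showing such a status text on the TFT when the code is not 200 would make a wrong key or an exhausted credit balance easier to spot than an empty reply.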

Software: Setup and Loop

In the setup function we initialise the TFT and the I2S board, and connect to the Internet using our Wi-Fi credentials.

void setup() {
  delay(300);
  …
  audio.setPinout(I2S_BCLK, I2S_LRC, I2S_DOUT);
  // I2S board initialised
  audio.setVolume(50);

Inside the loop function, we send questions to ChatGPT. Once a question has been answered, we remain inside loop, ready for the next one:

String response = makeApiRequest(msg);
// send the question to ChatGPT
...
if (l1 > 200) {
  response = response.substring(0, 200);
  // keep only the first 200 characters
}
audio.connecttospeech(response.c_str(), "en");
// speak the (possibly truncated) reply

The Google TTS has a 200-character limit and will not output any text beyond this limit. To work around this, the answer string is trimmed to 200 characters for Google TTS. This ensures that while the full answer is displayed on the screen, only the first 200 characters are vocalized. For longer answers, the screen scrolls, but this can be adjusted by making slight modifications to the sketch.
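One possible modification, not part of the author's sketch, would be to split a long answer into several pieces of at most 200 characters and speak them one after another instead of discarding everything past the limit. The following plain C++ sketch shows the splitting step only. `splitForSpeech` is a hypothetical helper, and it counts bytes, so a multi-byte UTF-8 character could be cut if a single word is longer than the limit.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Split text into chunks of at most `limit` bytes, breaking at the last
// space inside each window when there is one, otherwise cutting hard.
std::vector<std::string> splitForSpeech(const std::string& text,
                                        std::size_t limit = 200) {
    assert(limit > 0);
    std::vector<std::string> chunks;
    std::size_t pos = 0;
    while (pos < text.size()) {
        // Skip spaces left over from the previous break.
        while (pos < text.size() && text[pos] == ' ') ++pos;
        if (pos >= text.size()) break;

        std::size_t len = std::min(limit, text.size() - pos);
        if (pos + len < text.size()) {
            // More text follows: prefer to end this chunk at a word boundary.
            std::size_t space = text.rfind(' ', pos + len);
            if (space != std::string::npos && space > pos) {
                len = space - pos;
            }
        }
        chunks.push_back(text.substr(pos, len));
        pos += len;
    }
    return chunks;
}
```

On the ESP32, each chunk would then be passed to `audio.connecttospeech()` in turn, waiting until playback of the previous chunk has finished. How to detect the end of playback depends on the Audio library version and is not covered in the article.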

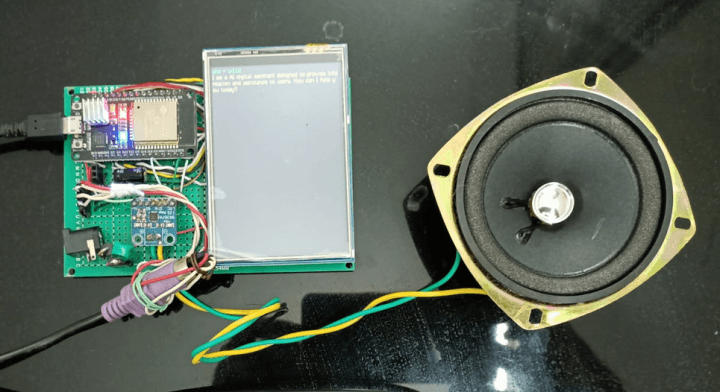

AI Terminal Project Testing

The delays I’ve used inside the software loops are quite specific. You may adjust them, but I recommend starting with the default values used in the code. Once you’re comfortable with the responses, feel free to tweak them. I began with simple questions like “Who r u?” ChatGPT responded appropriately, displaying the introduction on the screen, while the speaker articulated it clearly.

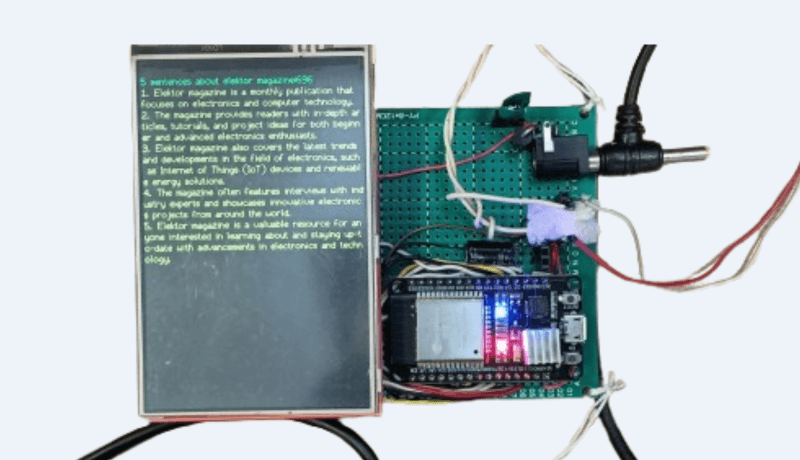

I then tested the system using questions such as: “Write 5 sentences about Elektor magazine” (Figure 4) and even asked it to write blink sketches for Arduino UNO, ESP32, and Raspberry Pi Pico.

In every case, ChatGPT performed flawlessly, understood the context perfectly and answered accurately, with the speaker delivering the voice output loud and clear.

To Wrap Up

All files associated with this project can be found here. The Internet and search engines such as Google have totally revolutionized our access to information and replaced those bulky encyclopedias that used to line our bookshelves at home. Now we see the rise of AI software and machines based on the ChatGPT API, TensorFlow Lite Micro, Edge Impulse, OpenMV, and TinyML, which are poised to disrupt existing business models and more traditional methods of problem-solving. We live in interesting times.

Questions About the AI Terminal?

If you have technical questions or comments about the AI terminal, feel free to contact the author by email at berasomnath@gmail.com or the Elektor editorial team at editor@elektor.com.

This article (230536-01) appears in the Guest-Edited AI 2024 Bonus Edition of Elektor.
