The Machine has a single memory of 160 terabytes

May 23, 2017

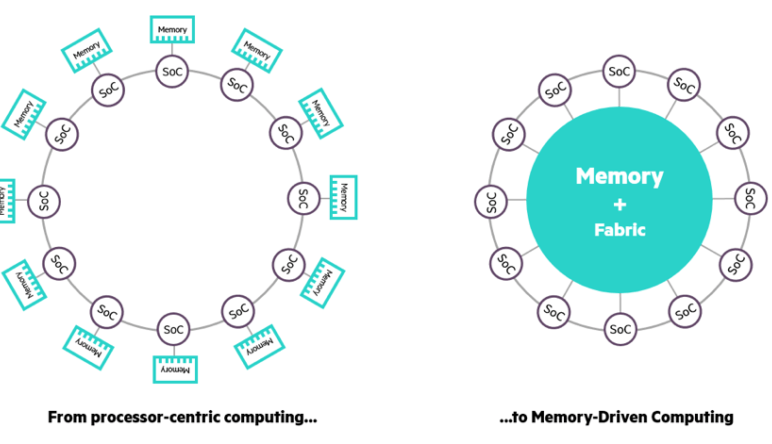

Even though computers keep getting faster, they cannot keep up with the enormous amounts of data we produce. The reason is that they are still built on an architecture developed more than sixty years ago, in which the processor is the central component and memory comes second. To solve this problem, researchers are working on a new computing paradigm called Memory Driven Computing.

In Memory Driven Computing, memory is the central component and the processor comes second: one huge pool of memory is shared by a large number of processors. Researchers at Hewlett Packard Enterprise (HPE) unveiled a first prototype of such a computer, called “The Machine”, in November 2016. Now they have announced a version with 160 TB of memory. They do not intend to stop there, as they believe a single memory of 4,096 yottabytes is feasible (250,000 times the entire digital universe today). The Machine is the largest research project in the company's history.

Illustration: source HPE
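The figures quoted above can be checked with a little back-of-the-envelope arithmetic. The sketch below assumes decimal (SI) prefixes (1 TB = 10^12 bytes, 1 ZB = 10^21 bytes, 1 YB = 10^24 bytes); the article does not say which convention HPE used, and the variable names are purely illustrative.

```python
# Assumption: decimal (SI) prefixes, not binary (TiB, YiB) ones.
TB = 10**12
ZB = 10**21
YB = 10**24

prototype_bytes = 160 * TB      # memory of the announced prototype
envisioned_bytes = 4_096 * YB   # single memory HPE considers feasible
ratio_to_universe = 250_000     # "250,000 times the entire digital universe"

# Size of today's digital universe implied by the quoted ratio (exact integer math)
implied_universe_bytes = envisioned_bytes // ratio_to_universe

# How many times larger the envisioned memory is than the 160 TB prototype
scale_up = envisioned_bytes // prototype_bytes

print(f"implied digital universe: {implied_universe_bytes / ZB:.3f} ZB")
print(f"scale-up over the prototype: {scale_up:,}x")
```

Under these assumptions the script prints an implied digital universe of 16.384 ZB and a scale-up of 25,600,000,000,000x, i.e. the envisioned memory would be roughly 2.56 × 10^13 times the size of the 160 TB prototype.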